Large Volume Data #Demand Side

In my last post I analysed requirements to be met to fully utilize new often updated large volume data. Now, after discussing about the topic for two days I have some further thougths. Mostly about demand.

Big (environmental) data producers have surprisingly same kind of thoughts about what is needed. As I pointed out in the previous post, data volumes are growing fast and data is updated faster and faster. For example ECMWF (The European Centre for Medium-Range Weather Forecasts) receives about 300 million observations and produces over 8 TB new data in a day.

It’s not hard to produce this large data. It’s hard to handle it.

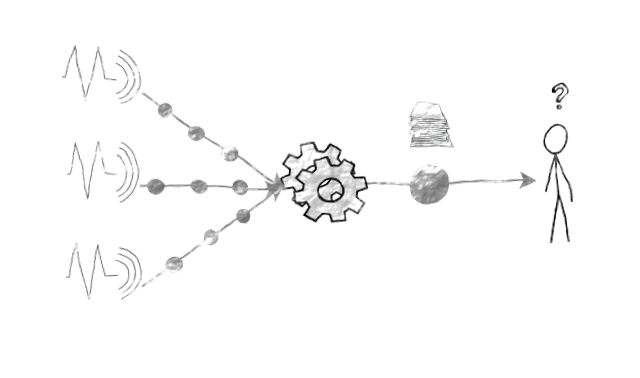

Traditional workflow is pretty straight forward:

- you get some new information

- you run you model and get some data

- you trigger product generation and

- push products to users.

This don’t work any more. Measurements can’t be seen as a single event but as a constant flow of raw data. Like stream of fotons passing by, you are not interested about them, you don’t try to store them. But they give you a great amount of information about current moment. This is why all this data is produced — more light, more accurate picture. Users want information, not data. And they want it when they need it — not when next foton happens to hit their retina.

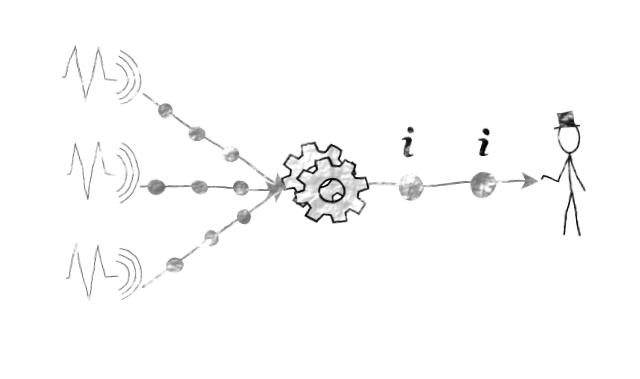

Huge amount of measurements and large data volumes have been driving a paradigm change from push to pull. Instead of good-old ftp, users are given interfaces to request information whenever they need it, trusting that they always get the best available information at the time. Data can be fetched when needed and only when needed.

Data producers have also had to overhaul their product generation architectures. Five years ago Finnish Meteorological Institute was producing over million images in a day to be fetched only five million times before they were outdated. Nowadays, more and more products are generated on-demand only when users request for them.

On-demand paradigm can be also utilized while processing information. Great amount of post-processing can be done only when users want to investigate a phenomenon of their interest. Take a look at NOAA’s version for example.

So how this is achieved? In the last post I listed three basic requirements:

- standardisation and harmonisation,

- rich interfaces to retrieve the data (even with a cost of ease of use) and

- bringing users to data by providing processing capabilities where the data is located.

The truth can still be found from there. With these guidelines anyone can become as an information island in the sea of data.

Lähteet:

http://www.copernicus.eu/sites/default/files/library/Big_Data_at_ECMWF_01.pdf

http://www.ncdc.noaa.gov/wct/