Monthly Archives: January 2016

Future Infrastructure for Large Data Sets

Next week I’m attending a highly interesting EUMETSAT [1] Data Services Workshop, which aims to find a future path for disseminating and processing environmental satellite data. Here are some considerations on the topic.

Traditionally, data dissemination has been file based. Large data sets have been chopped into pieces and delivered via an FTP server or the like. NOAA’s GFS dissemination system [2] is a typical example of the traditional way to provide data.

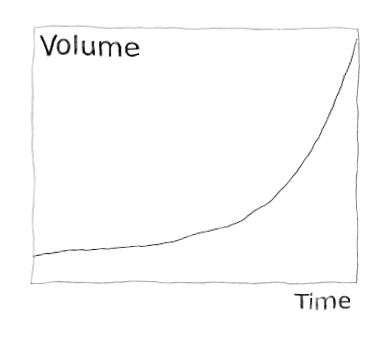

Today, as data sets grow ever bigger [3] and are updated ever more frequently, the traditional way is no longer enough. When handling large, frequently updated data sets, I/O (both network and disk) is the bottleneck. That’s why data should be copied and transferred as little as possible. To achieve this, three requirements should be met:

1) Data have to be in a standard, open format with good software support, so that data users don’t have to convert the data to other formats.

Providing a good open standard format is a domain-specific problem. It’s still a good principle for software developers to support the original formats and to transform data on demand into the data model the software requires. Converting everything to a software-specific data format may seem tempting at first, but it quickly becomes a bottleneck.

2) Users need to be able to retrieve only the subset of the data they are interested in. The area of interest, for example, may change routinely (consider a ship moving in the Arctic), so retrieving a subset needs to be automatic.

In the geospatial domain, the Open Geospatial Consortium (OGC) [4] provides a good set of interface standards to follow. The WMS [5], WFS [6] and WCS [7] standards are enough for most cases. Notably, these standards are soon to be extended with a PubSub standard [8], which helps with the dissemination of data.
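As a rough illustration of automatic subsetting, here is a minimal sketch that builds a WCS 2.0.1 GetCoverage request for a small box around a moving position. The endpoint `https://example.org/wcs`, the coverage name `sea_ice_concentration`, the axis labels `Lat` and `Long`, and the output format are all assumptions; a real service reports its own coverage names and axis labels through GetCapabilities and DescribeCoverage.

```python
from urllib.parse import urlencode


def wcs_subset_url(base_url, coverage_id, lat, lon, half_width_deg=0.5):
    """Build a WCS 2.0.1 GetCoverage URL for a box centred on (lat, lon)."""
    params = [
        ("service", "WCS"),
        ("version", "2.0.1"),
        ("request", "GetCoverage"),
        ("coverageId", coverage_id),
        # One subset parameter per axis. The axis labels ("Lat", "Long") are
        # assumptions and must match what DescribeCoverage reports.
        ("subset", f"Lat({lat - half_width_deg:g},{lat + half_width_deg:g})"),
        ("subset", f"Long({lon - half_width_deg:g},{lon + half_width_deg:g})"),
        ("format", "image/tiff"),
    ]
    # Keep parentheses, commas and slashes readable in the query string.
    return base_url + "?" + urlencode(params, safe="(),/")


# Hypothetical ship track: each new position yields a new, small request.
track = [(70.0, 20.0), (70.5, 21.0)]
urls = [
    wcs_subset_url("https://example.org/wcs", "sea_ice_concentration", lat, lon)
    for lat, lon in track
]
```

Only the box around the ship travels over the network, and the request can be regenerated every time the position changes.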

In industrial use a proper SLA is required, but since OGC services can be chained, the data provider does not need to act as the backend for every client. This means that request limits can be relatively tight.
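One way to picture such chaining is an intermediate service that answers repeated requests from its own cache and only occasionally goes back to the original provider. The sketch below is an assumption about how such a relay might look, not a description of any particular product; `CachingRelay`, `fake_provider` and the example URL are hypothetical names used for illustration.

```python
import time


class CachingRelay:
    """Answer repeated requests from a local cache instead of the upstream service."""

    def __init__(self, fetch_upstream, max_age_s=300.0, clock=time.monotonic):
        self._fetch = fetch_upstream      # callable: url -> response body
        self._max_age_s = max_age_s       # how long a cached response stays fresh
        self._clock = clock
        self._cache = {}                  # request URL -> (time fetched, body)
        self.upstream_calls = 0           # how often the provider was contacted

    def get(self, url):
        now = self._clock()
        hit = self._cache.get(url)
        if hit is not None and now - hit[0] < self._max_age_s:
            return hit[1]                 # served locally, provider not involved
        body = self._fetch(url)
        self.upstream_calls += 1
        self._cache[url] = (now, body)
        return body


def fake_provider(url):
    """Stand-in for the data provider's service (hypothetical)."""
    return f"data for {url}".encode()


relay = CachingRelay(fake_provider)
# A hundred clients ask for the same layer through the relay.
responses = [relay.get("https://example.org/wms?layers=clouds") for _ in range(100)]
```

In this toy run the provider sees a single request although a hundred were served, which is why a tight request limit at the source can still be workable.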

3) When possible, users should be given access to the data at its original source or near it. NOAA’s Data Alliance program [9] and the Landsat Program [10] are excellent examples of this. Users of Amazon AWS can attach volumes containing open data sets [10] to their server instances and use the data without copying or converting it at all. Google Cloud Platform [11] also provides data within its services.

Producers of large data sets have significant ICT infrastructure of their own. It should not be a tremendous task to open up some processing capacity to users. Users could purchase virtual servers with read access to the raw data, or do processing via WPS [12].
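To give an idea of what processing via WPS could look like from the user's side, here is a minimal sketch that builds a key-value Execute request in the WPS 1.0.0 style, with inputs joined as `name=value;name=value`. The endpoint, the process name `monthly_mean` and its inputs are hypothetical, and servers differ in which encodings they accept, so treat this as an assumption to check against the target service.

```python
from urllib.parse import urlencode, parse_qs, urlsplit


def wps_execute_url(base_url, process_id, inputs):
    """Build a key-value WPS 1.0.0 Execute URL for the given process and inputs."""
    # Inputs are joined as name=value pairs separated by semicolons.
    data_inputs = ";".join(f"{name}={value}" for name, value in inputs.items())
    params = {
        "service": "WPS",
        "version": "1.0.0",
        "request": "Execute",
        "identifier": process_id,   # hypothetical process name
        "datainputs": data_inputs,
    }
    # urlencode percent-encodes "=" and ";" inside the datainputs value.
    return base_url + "?" + urlencode(params)


url = wps_execute_url(
    "https://example.org/wps",
    "monthly_mean",
    {"coverage": "sea_ice_concentration", "year": 2015},
)
# Decode the query again to show what the server would receive.
query = parse_qs(urlsplit(url).query)
```

The computation then runs next to the data, and only the result has to be transferred.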

As Jason Fried noted, you should always sell your by-products [13].

[1] http://www.eumetsat.int/website/home/index.html

[2] https://www.ncdc.noaa.gov/data-access/model-data/model-datasets/global-forcast-system-gfs

[3] http://www.copernicus.eu/sites/default/files/library/Big_Data_at_ECMWF_01.pdf

[4] http://www.opengeospatial.org/

[5] http://www.opengeospatial.org/standards/wms

[6] http://www.opengeospatial.org/standards/wfs

[7] http://www.opengeospatial.org/standards/wcs

[8] http://www.opengeospatial.org/projects/groups/pubsubswg

[9] https://data-alliance.noaa.gov/

[10] https://aws.amazon.com/public-data-sets/

[11] https://cloud.google.com/noaa-big-data/

[12] http://www.opengeospatial.org/standards/wps

[13] https://signalvnoise.com/posts/1620-sell-your-by-products